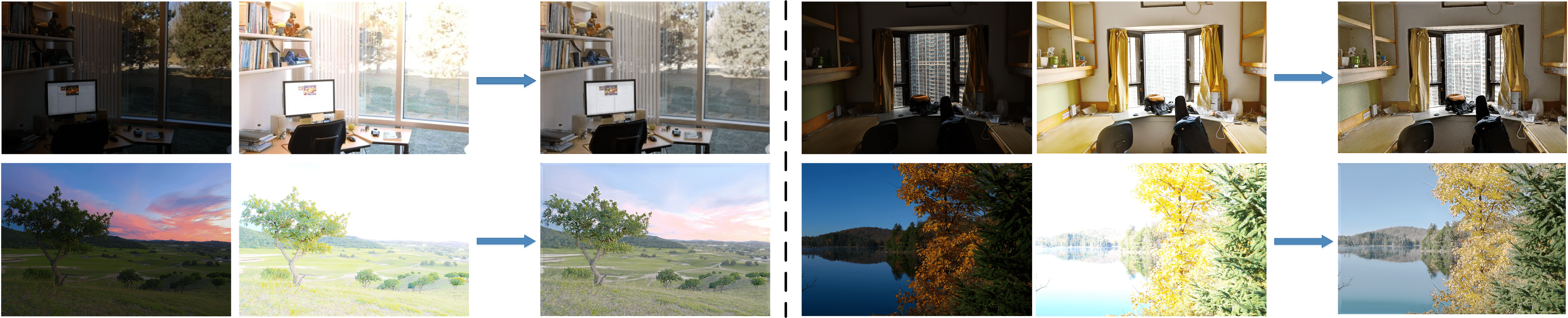

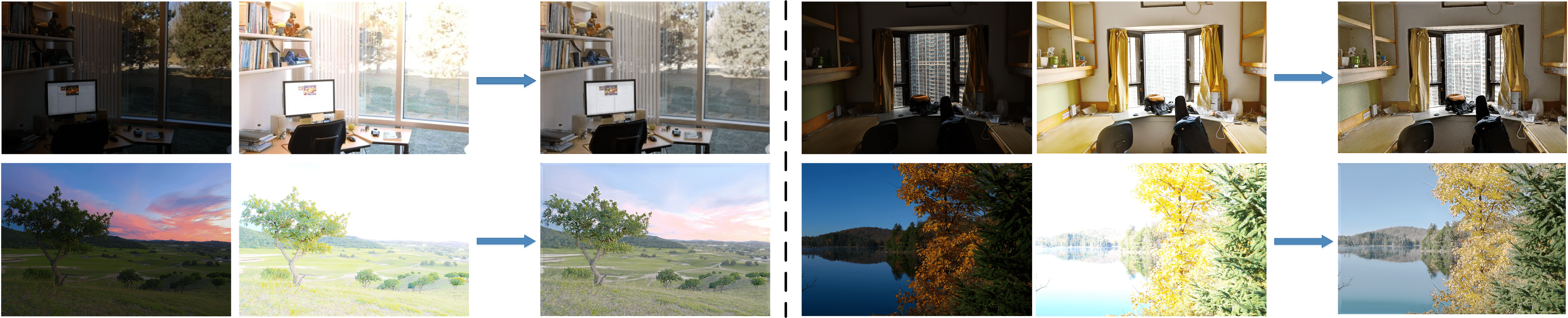

High dynamic range (HDR) imaging requires fusing a set of low dynamic range (LDR) images captured at different exposure levels. Existing works combine the LDR images either at the pixel level, by assigning each image a weighting map based on texture metrics, or at the feature level, by transferring the images into a semantic space. Both approaches neglect the fact that texture calibration and semantic consistency are required together. In this paper, we propose a novel encoder-decoder network consisting of a content prior guided (CPG) encoder and a detail prior guided (DPG) decoder that fuses the images at both the pixel level and the feature level. Specifically, the encoder is built from CPG layers, which incorporate a pyramid content prior that blends at the pixel level to transform the feature maps in the encoding layers. Correspondingly, the decoder comprises DPG layers that incorporate a Laplacian pyramid detail prior to further boost fusion performance. Because the content and detail priors are added to the network in a pyramid-structured manner, which provides fine-grained control over the features, both semantic consistency and texture calibration can be assured. Extensive experiments demonstrate the superiority of our method over existing state-of-the-art methods.
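To make the two pyramid priors concrete, the following is a minimal NumPy sketch of a multi-scale content pyramid and a Laplacian detail pyramid built from a naive pixel-level blend of LDR images. It is an illustration only, not the CPG or DPG layers themselves: the function names (`downsample`, `upsample`, `content_pyramid`, `detail_pyramid`, `reconstruct`), the plain averaging blend, and the use of 2x2 average pooling in place of Gaussian filtering are all assumptions made for brevity. Images are assumed to be 2-D grayscale arrays whose sides are divisible by `2 ** (levels - 1)`.

```python
import numpy as np


def downsample(img):
    """Halve resolution with 2x2 average pooling (stand-in for blur + decimation)."""
    h, w = img.shape
    return img.reshape(h // 2, 2, w // 2, 2).mean(axis=(1, 3))


def upsample(img):
    """Double resolution with nearest-neighbour replication."""
    return img.repeat(2, axis=0).repeat(2, axis=1)


def content_pyramid(img, levels):
    """Coarse-to-fine copies of the image: index 0 is full resolution."""
    pyramid = [img]
    for _ in range(levels - 1):
        pyramid.append(downsample(pyramid[-1]))
    return pyramid


def detail_pyramid(img, levels):
    """Laplacian pyramid: per-level residuals plus the coarsest content level."""
    content = content_pyramid(img, levels)
    details = [content[i] - upsample(content[i + 1]) for i in range(levels - 1)]
    details.append(content[-1])  # low-frequency base needed for reconstruction
    return details


def reconstruct(details):
    """Invert detail_pyramid by upsampling and adding residuals back."""
    img = details[-1]
    for residual in reversed(details[:-1]):
        img = upsample(img) + residual
    return img


# Hypothetical input: three random "exposures" blended by a plain average.
rng = np.random.default_rng(0)
ldr_stack = [rng.random((16, 16)) for _ in range(3)]
blend = np.mean(ldr_stack, axis=0)

content_prior = content_pyramid(blend, levels=3)  # shapes 16x16, 8x8, 4x4
detail_prior = detail_pyramid(blend, levels=3)    # residuals + 4x4 base
```

In a network of the kind described above, each pyramid level would be paired with the encoder or decoder stage of matching resolution. How the priors actually modulate the feature maps is specific to the CPG and DPG layers and is not modelled in this sketch.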